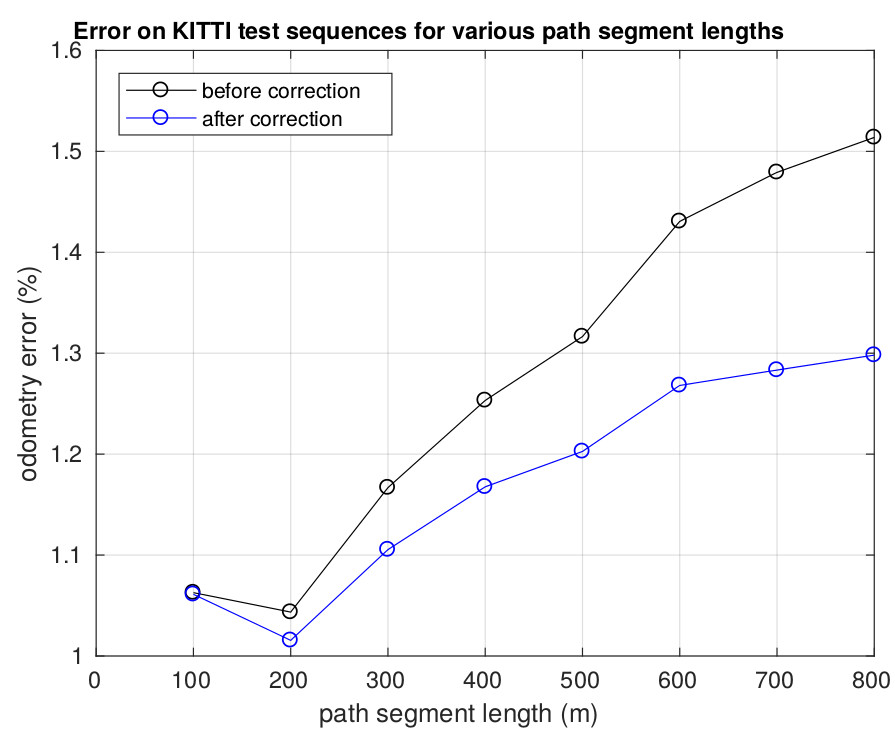

This paper presents a novel technique to correct for bias in a classical estimator using a learning approach. We apply a learned bias correction to a lidar-only motion estimation pipeline. Our technique trains a Gaussian process (GP) regression model using data with ground truth. The inputs to the model are high-level features derived from the geometry of the point clouds, and the outputs are the predicted biases between the poses computed by the estimator and the ground truth. The predicted biases are then applied as a correction to the poses computed by the estimator. Our technique is evaluated on over 50 km of lidar data, including the KITTI odometry benchmark and lidar datasets collected around the University of Toronto campus. After applying the learned bias correction, we obtained significant improvements to lidar odometry on all datasets tested. Starting from an already accurate lidar odometry algorithm, we reduced errors by around 10% on all datasets, at the cost of a less than 1% increase in run-time computation.
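The train-then-correct loop described above can be illustrated with a minimal sketch. This is not the paper's implementation: it assumes a scalar bias (the paper works with full poses), a squared-exponential kernel, hand-picked hyperparameters, and the sign convention bias = estimate minus ground truth. The names `BiasGP`, `rbf_kernel`, and `correct_estimate` are hypothetical, and the feature vectors are placeholders for whatever geometric features are actually used.

```python
import math


def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential kernel between two feature vectors (assumed kernel)."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-0.5 * sq_dist / length_scale ** 2)


def cholesky(matrix):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                lower[i][j] = math.sqrt(matrix[i][i] - s)
            else:
                lower[i][j] = (matrix[i][j] - s) / lower[j][j]
    return lower


def cholesky_solve(lower, rhs):
    """Solve (L L^T) x = rhs by forward, then backward, substitution."""
    n = len(rhs)
    y = [0.0] * n
    for i in range(n):
        y[i] = (rhs[i] - sum(lower[i][k] * y[k] for k in range(i))) / lower[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(lower[k][i] * x[k] for k in range(i + 1, n))) / lower[i][i]
    return x


class BiasGP:
    """Zero-mean GP regression from geometric features to a scalar bias."""

    def __init__(self, noise_var=1e-4, length_scale=1.0):
        self.noise_var = noise_var
        self.length_scale = length_scale
        self.features = []
        self.alpha = []

    def fit(self, features, biases):
        # Training biases are (estimator output - ground truth), an assumed convention.
        n = len(features)
        gram = [
            [
                rbf_kernel(features[i], features[j], self.length_scale)
                + (self.noise_var if i == j else 0.0)
                for j in range(n)
            ]
            for i in range(n)
        ]
        self.features = [list(f) for f in features]
        self.alpha = cholesky_solve(cholesky(gram), list(biases))

    def predict(self, feature):
        # Posterior mean: k(x*, X) @ (K + noise * I)^-1 @ y
        return sum(
            rbf_kernel(feature, f, self.length_scale) * a
            for f, a in zip(self.features, self.alpha)
        )


def correct_estimate(estimate, predicted_bias):
    """Remove the predicted bias from the estimator output."""
    return estimate - predicted_bias


if __name__ == "__main__":
    # Placeholder features and biases, for illustration only.
    train_features = [[0.0], [1.0], [2.0], [3.0]]
    train_biases = [0.0, 0.1, 0.2, 0.3]
    gp = BiasGP(noise_var=1e-6)
    gp.fit(train_features, train_biases)
    print(correct_estimate(5.1, gp.predict([1.0])))
```

The estimator itself is untouched in this sketch: the GP only post-processes its output, which is consistent with the small run-time overhead reported above. In practice a library GP with learned hyperparameters would replace the hand-rolled solver.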

Contributions

- Introduction of a novel technique to directly correct the output of a classical state estimator using a learned bias correction

- Demonstration of how scene geometry can be used to model biases in motion estimation

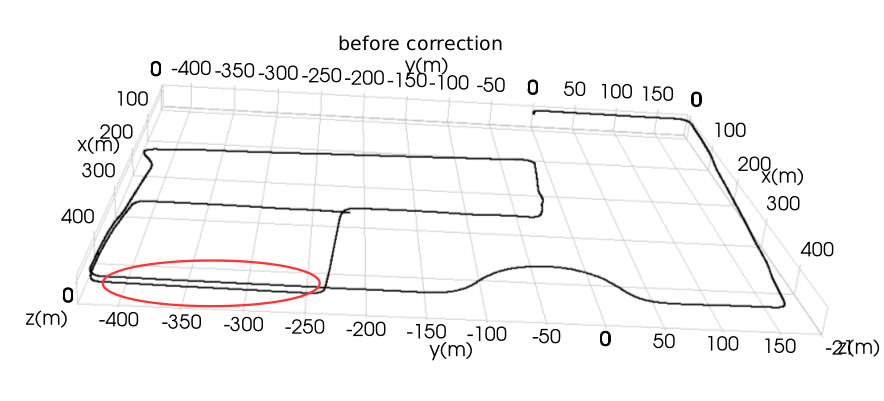

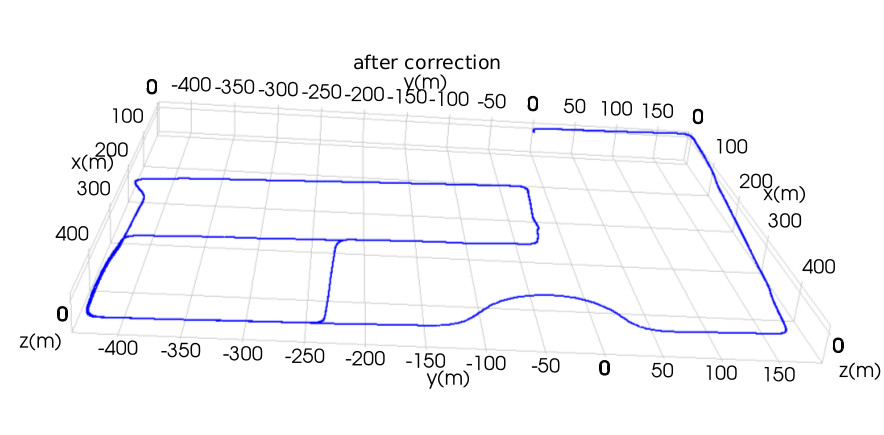

Results in Images

If you’re curious about what those images represent, then you should read the full paper.
